Soon (hopefully) Mac users will get MLX support in Ollama: https://github.com/ollama/ollama/pull/9118. It is said to bring a ~40% speed improvement, but not for every model (the model has to be available in an MLX version). For real-world results, we probably need to wait a bit.

On the other hand, we can already see some meaningful comparisons, e.g. here: https://www.reddit.com/r/ollama/comments/1j0by7r/tested_local_llms_on_a_maxed_out_m4_macbook_pro/

MLX does the job, but not quite the suggested 40%: Qwen2.5-72B-Instruct (4bit) runs at 10.86 tokens/s vs 8.31 tokens/s. That is an increase of about 30%, which is still a very good result.
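To check the "about 30%" figure, here is a small sketch of the relative throughput calculation. It assumes, as the text implies, that 10.86 tokens/s is the MLX run and 8.31 tokens/s the non-MLX baseline from the linked comparison; the function name is just for illustration.

```python
def speedup_percent(new_tps: float, old_tps: float) -> float:
    """Relative throughput increase of new_tps over old_tps, in percent."""
    return (new_tps / old_tps - 1.0) * 100.0


# Qwen2.5-72B-Instruct (4bit) figures quoted above.
# Assumption: 10.86 is the MLX result, 8.31 the non-MLX baseline.
mlx_tps = 10.86
baseline_tps = 8.31

print(f"{speedup_percent(mlx_tps, baseline_tps):.1f}%")  # prints "30.7%"
```

For comparison, a full 40% gain over the same baseline would mean roughly 8.31 × 1.4 ≈ 11.6 tokens/s.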

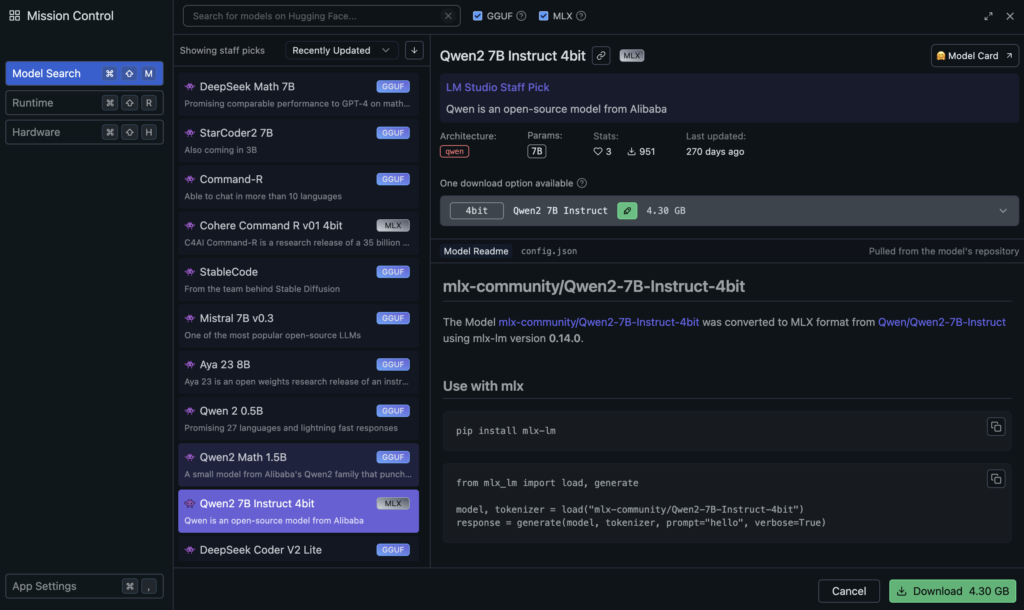

LM Studio for Mac can be installed from https://lmstudio.ai/, and MLX models can be chosen in the Discover section. LM Studio also exposes an API (https://lmstudio.ai/docs/api), which can be used in tools such as Continue (https://docs.continue.dev/customize/model-providers/more/lmstudio). For autocomplete, DeepSeek Coder V2 Lite Instruct 4bit MLX gives good results.
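As a rough sketch of talking to that API directly, the snippet below uses only the Python standard library. It assumes LM Studio's local server is running with its OpenAI-compatible endpoint at the default `http://localhost:1234/v1`; the model identifier is hypothetical, so replace it with the exact name LM Studio shows for your downloaded MLX model.

```python
import json
import urllib.request

# Assumption: LM Studio's local server runs on its default port with an
# OpenAI-compatible API; change this if you configured a different port.
BASE_URL = "http://localhost:1234/v1"

# Hypothetical model identifier: use the name LM Studio lists for your MLX model.
MODEL = "deepseek-coder-v2-lite-instruct-mlx"


def build_chat_request(prompt: str, model: str = MODEL) -> urllib.request.Request:
    """Build (but do not send) a POST request to the chat completions endpoint."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "temperature": 0.2,
    }
    return urllib.request.Request(
        f"{BASE_URL}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(prompt: str) -> str:
    """Send the request to the local server and return the reply text."""
    with urllib.request.urlopen(build_chat_request(prompt), timeout=120) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]
```

With the server running, `ask("Write a Python function that reverses a string.")` should return the model's answer. Tools like Continue talk to the same local server, so if this works, the editor integration should be able to reach it too.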