Several significant advancements and initiatives in AI, agent systems, and related technologies were announced recently, showcasing rapid growth, groundbreaking research, and new applications across industries.

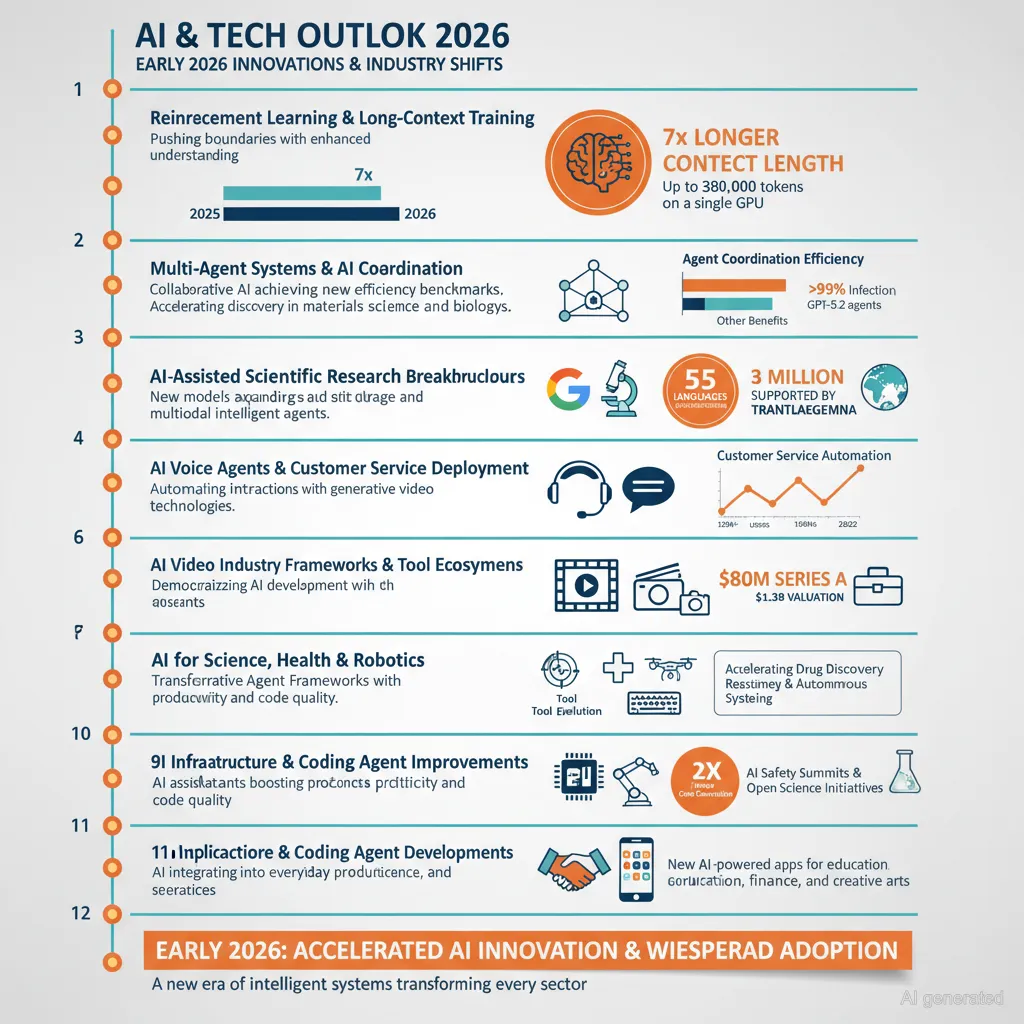

Reinforcement Learning and Long-Context Training

New batching algorithms now enable reinforcement learning (RL) training with up to 7× longer context lengths without any loss in accuracy. This allows RL training of large language models, such as the open-source gpt-oss trained with GRPO, to reach context windows of up to 380,000 tokens on a single 192 GB GPU. These innovations promise to improve the efficiency and scalability of RL systems, which is essential for more complex reasoning and long-horizon tasks.

Multi-Agent Systems and AI Coordination

Research on multi-agent language model systems addressed the challenge of coordinating agents under spatiotemporal partial observability. The MACRO-LLM method enables several agents, each with limited local information, to negotiate and revise joint action proposals for cooperative reasoning. Tested in scenarios such as adaptive car platooning and pandemic control, MACRO-LLM outperformed traditional multi-agent reinforcement learning, cutting infections by over 99% in the pandemic simulations while scaling reliably. Separately, Cursor demonstrated an autonomous coding project in which GPT-5.2 agents built a browser from scratch in one week, generating over 3 million lines of code across 1,000 files. Cursor's results highlight the power of planning and subtask delegation without inter-agent communication, which avoids drift and duplicated work.
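The delegation pattern described for the Cursor project can be pictured with a minimal sketch. This is not Cursor's implementation: the `Subtask`, `plan`, `worker`, and `run` names are hypothetical, and the worker is a stand-in for a model call. It assumes only what the paragraph states, namely that a planner hands out non-overlapping subtasks and that workers never talk to each other.

```python
from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass


@dataclass(frozen=True)
class Subtask:
    task_id: int
    description: str


def plan(goal: str, parts: list[str]) -> list[Subtask]:
    """Hypothetical planner: one subtask per distinct part, so no work is duplicated."""
    unique_parts = list(dict.fromkeys(parts))  # drop repeats, keep original order
    return [Subtask(i, f"{goal}: {part}") for i, part in enumerate(unique_parts)]


def worker(subtask: Subtask) -> str:
    """Stand-in for an agent call; it sees only its own subtask, never its peers."""
    return f"done[{subtask.task_id}] {subtask.description}"


def run(goal: str, parts: list[str]) -> list[str]:
    """Plan once, then run workers independently and collect results in plan order."""
    subtasks = plan(goal, parts)
    with ThreadPoolExecutor(max_workers=4) as pool:
        return list(pool.map(worker, subtasks))


if __name__ == "__main__":
    for line in run("browser", ["parser", "layout", "parser"]):
        print(line)
```

Because each worker receives only its own `Subtask` and returns a result to the planner, there is no shared state through which agents could drift from the plan or repeat each other's work.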

Breakthroughs in AI-Assisted Scientific Research

Grok 4.20, a new state-of-the-art model, showed dramatic capability in advanced mathematical research by independently discovering a novel Bellman function, improving known bounds for complex probability and Boolean problems that took human experts years to analyze. Another paper introduced test-time tool evolution, allowing language models to dynamically create and refine scientific reasoning tools during problem-solving, surpassing static tool libraries. These developments represent significant steps toward AI systems that accelerate discovery and create reusable knowledge assets.

Google’s Gemini and TranslateGemma Launches

Google introduced Gemini Personal Intelligence, enabling AI to connect securely with user data across Google apps such as Gmail, Calendar, Photos, and YouTube to provide personalized, context-aware assistance, for example suggesting travel plans or retrieving details from emails and photos. Complementing this, Google released TranslateGemma, a suite of open multilingual translation models ranging from 4B to 27B parameters and supporting 55 languages, including low-resource ones. These models outperform larger baselines, run efficiently on devices from mobile phones to GPUs, and retain multimodal capabilities such as translating text embedded in images.

AI Voice Agents and Customer Service Deployment

A partnership between ElevenLabs and Deutsche Telekom will soon deploy realistic AI voice agents to augment customer service for Europe’s largest telecom provider. Available via app and phone, these agents function 24/7 with minimal wait times and human-like interaction quality, marking a significant advancement in accessible voice technology aimed at democratizing AI-powered support.

AI Video and Media Scaling Rapidly in Industry

The AI video startup Higgsfield raised an $80 million Series A extension at a valuation exceeding $1.3 billion, having reached a $200 million annualized run rate within 9 months and 15 million users globally. Its platform focuses on social media marketing applications that require speed, volume, and brand consistency over cinematic perfection, positioning AI video as foundational content production infrastructure. The company plans 200 product releases in 2025, reflecting an aggressive development pace.

Open Source Agent Frameworks and Tool Ecosystems

Openwork AI debuted a fast, secure, open-source computer-use agent that supports any model provider, lets users combine their preferred AI modules, and improves privacy by not using the main browser instance. Open Responses, a new open-source specification, was also introduced to standardize multi-provider LLM interfaces, improving consistency and extensibility across AI workflows. Claude Code’s subagent system exemplifies modular AI agent orchestration by spawning dedicated threads for subtasks, improving maintainability and long-term planning.

AI for Science, Health, and Robotics

Initiatives like ARPA-H’s PCX program, which aggregates pediatric hospital data to accelerate cancer care research, and research showing that smartwatches can serve as accurate gait speed monitors exemplify AI’s expanding role in health. Meanwhile, progress in robotics includes humanoid robots tested successfully in industrial logistics with Siemens and lightweight drone robots developed to clean solar panels and optimize energy yield.

Developer Tools and Coding Agent Improvements

The coding experience continues to evolve, highlighted by Replit’s sales training simulator compressing rep ramp time, GitHub Copilot’s agent memory improving context retention and code review effectiveness, and Opus 4.5 acting as an intelligent code interpreter. Developers are encouraged to leverage AI-driven planning and subagent orchestration to handle long-running tasks, reducing operational friction and boosting productivity.

AI Infrastructure and Compute Scaling

Compute capacity for AI has been doubling every 7 months since 2022, faster than Moore’s Law, driven largely by NVIDIA GPUs (more than 60% of the total) along with Google TPUs and Amazon Trainium. This infrastructure growth allows bigger and faster models to be developed and deployed, shifting the competitive advantage toward raw speed and efficient inference. NVIDIA announced its Rubin platform, which it says delivers up to 10× lower cost per inference token and needs 4× fewer GPUs for MoE model training.
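To put the 7-month doubling time in perspective, the short sketch below converts a doubling period into a yearly growth multiple. The 24-month figure for Moore’s Law is an assumption (the commonly quoted two-year doubling), not a number from the sources above, and the function and constant names are illustrative.

```python
def annual_growth_factor(doubling_months: float) -> float:
    """Growth multiple over 12 months for a quantity that doubles every `doubling_months`."""
    if doubling_months <= 0:
        raise ValueError("doubling_months must be positive")
    return 2 ** (12 / doubling_months)


def total_growth(doubling_months: float, elapsed_months: float) -> float:
    """Growth multiple after `elapsed_months` at a fixed doubling period."""
    if doubling_months <= 0:
        raise ValueError("doubling_months must be positive")
    return 2 ** (elapsed_months / doubling_months)


AI_COMPUTE_DOUBLING_MONTHS = 7    # figure quoted in the text
MOORES_LAW_DOUBLING_MONTHS = 24   # assumption: the usual two-year doubling

if __name__ == "__main__":
    ai = annual_growth_factor(AI_COMPUTE_DOUBLING_MONTHS)
    moore = annual_growth_factor(MOORES_LAW_DOUBLING_MONTHS)
    print(f"AI compute: {ai:.2f}x per year")
    print(f"Moore's Law (assumed): {moore:.2f}x per year")
```

Under these assumptions, a 7-month doubling works out to roughly 3.3× growth per year, compared with about 1.4× per year for a 24-month doubling.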

Applications and Ecosystem Developments

New AI-powered frameworks enable non-technical users to create full-stack mobile apps with a database, authentication, and integrations. Several breakthroughs in video world models afford more natural 3D control of scene composition. New libraries improve prompt optimization, filesystem-based semantic search is replacing cumbersome tool systems, and platforms like Listen Labs are changing how customer feedback is gathered through rapid, scalable interviews.

Community and Industry Movements

Notable career moves include Ahmad joining Airbnb as CTO to apply advanced AI models to the consumer experience, building on the company's design and engineering capabilities. The newly launched Merge Labs aims to bridge biological and artificial intelligence through novel brain-computer interfaces. ByteDance released SeedFold, a new protein folding model reported to outperform AlphaFold 3, signalling AI’s growing role in life sciences.

In summary, the landscape in early 2026 is marked by accelerating AI model capabilities, unprecedented compute scale, practical breakthroughs in multi-agent coordination, and a strong shift toward personalized, integrated AI assistants and tools. Across industries, notably video, customer service, robotics, and scientific research, AI-driven automation is redefining workflows and unlocking value at scale, while open standards and open-source frameworks foster community-driven development and inclusivity. The rapid pace of innovation suggests profound changes ahead for software development, scientific discovery, and everyday AI interactions.