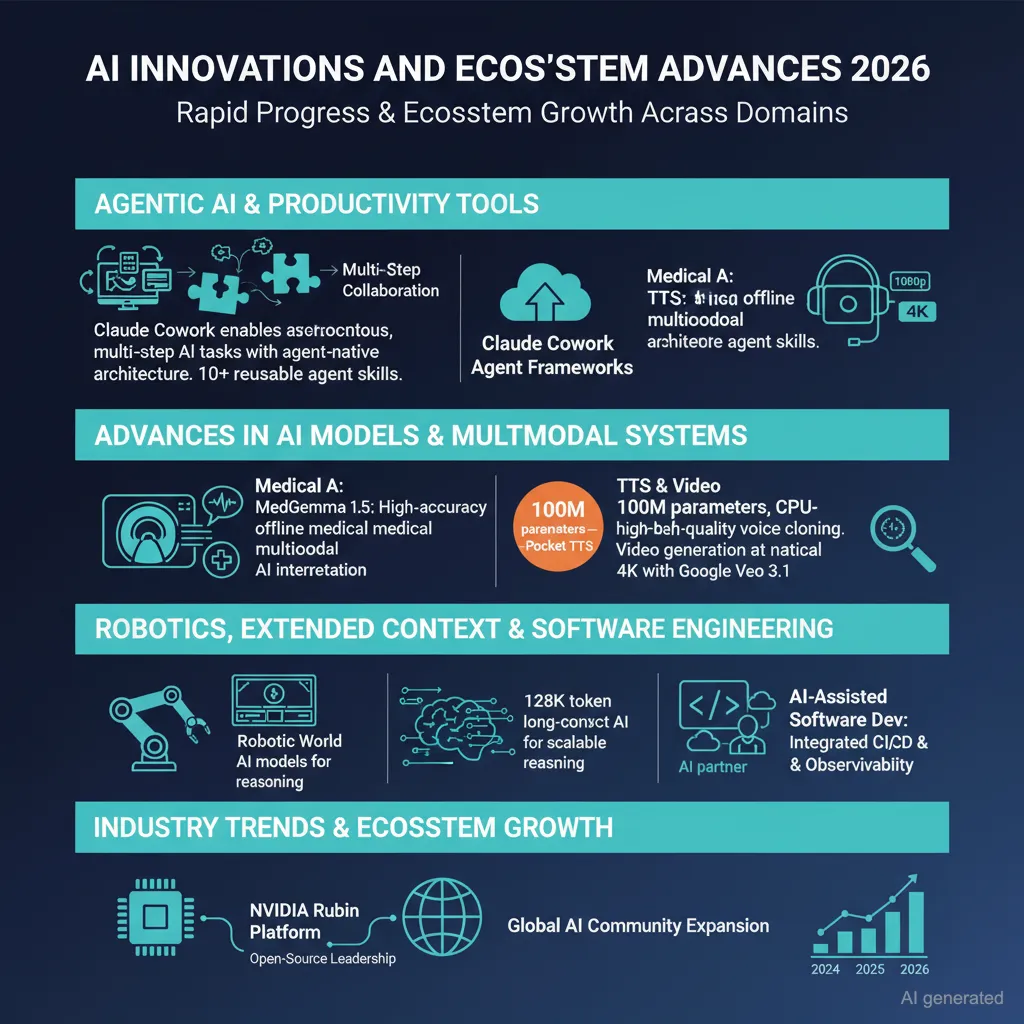

Recent developments show rapid progress across AI agents, video generation, voice synthesis, robotics, medical AI, and open-source tooling.

Agentic AI and Productivity Tools

Anthropic recently launched Claude Cowork, an interface for asynchronous, real-world task collaboration that goes beyond simple chat. Users hand off tasks that run locally on their computers, and Cowork works autonomously with their files and browser information, reading, writing, and organizing files as needed. Built on Claude Code technology, it is designed as an “agent-native” application that differs fundamentally from chat-based interaction: it supports long-running, multi-step operations with task queuing, browser automation, and task isolation through a built-in virtual machine. Its pricing and system design suggest it targets enterprise power users rather than general consumers. The product aims to remove barriers to distributing AI inside organizations and complements Claude Code’s coding features for power users.
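To make the queuing and isolation ideas concrete, here is a minimal sketch of a local task queue in which each submitted task runs its steps inside a fresh scratch directory. It makes no claim about how Cowork is actually built: `AgentTask`, `TaskQueue`, and the temporary directory (a weak stand-in for the virtual-machine isolation described above) are all hypothetical.

```python
import tempfile
from collections import deque
from dataclasses import dataclass, field
from pathlib import Path
from typing import Callable

# Hypothetical task model: each step receives the task's private workspace
# directory and returns a short status line that is appended to the task log.
Step = Callable[[Path], str]


@dataclass
class AgentTask:
    name: str
    steps: list[Step]
    log: list[str] = field(default_factory=list)


class TaskQueue:
    """Hypothetical FIFO queue: submit() returns at once, run_all() drains the queue."""

    def __init__(self) -> None:
        self._pending: deque[AgentTask] = deque()

    def submit(self, task: AgentTask) -> None:
        self._pending.append(task)

    def run_all(self) -> list[AgentTask]:
        finished = []
        while self._pending:
            task = self._pending.popleft()
            # Each task gets a fresh scratch directory that is deleted afterwards,
            # so tasks never share intermediate files (a stand-in for a real VM).
            with tempfile.TemporaryDirectory(prefix=f"{task.name}-") as tmp:
                workspace = Path(tmp)
                for step in task.steps:
                    task.log.append(step(workspace))
            finished.append(task)
        return finished


# Usage with two toy steps.
def write_notes(ws: Path) -> str:
    (ws / "notes.txt").write_text("draft")
    return "wrote notes.txt"


def count_files(ws: Path) -> str:
    return f"{len(list(ws.iterdir()))} file(s) in workspace"


queue = TaskQueue()
queue.submit(AgentTask("organize", [write_notes, count_files]))
queue.submit(AgentTask("audit", [count_files]))
for task in queue.run_all():
    print(task.name, task.log)
# organize ['wrote notes.txt', '1 file(s) in workspace']
# audit ['0 file(s) in workspace']
```

The "audit" task sees an empty workspace because the "organize" task's directory was discarded; a production system would add concurrency, persistence, and stronger sandboxing.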

Several frameworks and tools help developers build and deploy agents quickly, including LangSmith Agent Builder, OpenCode paired with MiniMax M2.1, and Vercel’s agent-browser. The agent ecosystem now also includes skills: reusable modules that extend an agent’s functionality and help orchestrate workflows, as in Google Antigravity’s Agent Skills and Anthropic’s Claude Skills. Research such as EvoRoute explores self-routing LLM agents that cut cost and latency by dynamically choosing an appropriate model for each sub-task in a workflow.
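The intuition behind self-routing can be illustrated with a simple cost- and latency-aware selector: for each sub-task, pick the cheapest model whose expected quality clears that sub-task’s bar within its latency budget. This is a hypothetical greedy heuristic, not EvoRoute’s published method, and the model names, prices, latencies, and quality scores are made-up placeholders.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelProfile:
    name: str
    cost_per_call: float  # hypothetical price units
    latency_s: float      # hypothetical average latency in seconds
    quality: float        # hypothetical capability score in [0, 1]


@dataclass(frozen=True)
class SubTask:
    name: str
    min_quality: float
    max_latency_s: float


def route(task: SubTask, models: list[ModelProfile]) -> ModelProfile:
    """Return the cheapest model meeting the sub-task's quality and latency limits.

    Falls back to the highest-quality model if no model satisfies both limits.
    """
    eligible = [m for m in models
                if m.quality >= task.min_quality and m.latency_s <= task.max_latency_s]
    if eligible:
        # Tie-break on latency so equally priced models favour the faster one.
        return min(eligible, key=lambda m: (m.cost_per_call, m.latency_s))
    return max(models, key=lambda m: m.quality)


MODELS = [
    ModelProfile("small", cost_per_call=0.1, latency_s=0.5, quality=0.60),
    ModelProfile("medium", cost_per_call=1.0, latency_s=1.5, quality=0.80),
    ModelProfile("large", cost_per_call=5.0, latency_s=4.0, quality=0.95),
]

workflow = [
    SubTask("extract fields", min_quality=0.5, max_latency_s=1.0),
    SubTask("summarize", min_quality=0.75, max_latency_s=2.0),
    SubTask("plan refactor", min_quality=0.9, max_latency_s=10.0),
]

for sub in workflow:
    print(sub.name, "->", route(sub, MODELS).name)
# extract fields -> small
# summarize -> medium
# plan refactor -> large
```

A learned router would estimate per-sub-task quality from feedback instead of relying on fixed scores, but the trade-off it optimizes is the same.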

For developers and non-technical users alike, AI is accelerating everyday workflows: full-stack web apps built in under 30 minutes with Claude and related tools, automated business intelligence through AI data analysts such as Fabi 2.0 that connect to multiple data sources, and automation for email, code instrumentation, and observability. Integrations with mainstream platforms like Slack bring these capabilities into the communication tools teams already use.
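One common way to push an automated report into Slack is an incoming webhook, which accepts an HTTP POST with a JSON body containing a `text` field. The sketch below only builds that request with Python’s standard library and does not send it; the webhook URL is a placeholder and `build_slack_message` is a hypothetical helper.

```python
import json
import urllib.request

# Placeholder URL: Slack issues a unique incoming-webhook URL per installation/channel.
WEBHOOK_URL = "https://hooks.slack.com/services/T000/B000/XXXX"


def build_slack_message(text: str, url: str = WEBHOOK_URL) -> urllib.request.Request:
    """Build (but do not send) a POST request that carries a plain-text Slack message."""
    payload = json.dumps({"text": text}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=payload,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


message = "Weekly sales summary is ready."
req = build_slack_message(message)
print(req.get_method(), req.get_header("Content-type"))  # POST application/json
# Sending is a separate, explicit step: urllib.request.urlopen(req)
```

Keeping request construction separate from sending makes the integration easy to test without network access.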

Advances in AI Models and Multimodal Systems

Upgrades in medical AI include MedGemma 1.5, an open multimodal medical model that interprets CT, MRI, histopathology, and other 2D medical images as well as medical text with high accuracy. It is paired with MedASR, a speech-to-text model specialized for medical speech that significantly reduces transcription errors. Both are designed to support offline use and are available on platforms such as Hugging Face and Google Vertex AI, giving healthcare developers ready access.

In text-to-speech (TTS), Pocket TTS is a lightweight, open-source 100M-parameter model that performs high-quality voice cloning on CPUs, with no GPU required. It bridges the gap between large, GPU-intensive models and smaller but inflexible ones. In speech recognition, a benchmark from Gladia argued that entity accuracy (getting names, numbers, and other key terms right) matters more than the traditional word error rate (WER); by Gladia’s account, its model outperformed competitors in noisy environments and supports dynamic multilingual transcription.
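The difference between the two metrics is easy to see in code. WER counts every substitution, deletion, and insertion equally, while an entity-focused score asks only whether the words that matter survived. The entity check below uses a simple hypothetical definition (case-insensitive exact match of each reference entity in the transcript); Gladia’s benchmark may define entity accuracy differently, and the sentences are invented examples.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = (substitutions + deletions + insertions) / number of reference words."""
    ref, hyp = reference.lower().split(), hypothesis.lower().split()
    # dist[i][j] = edit distance between the first i reference and first j hypothesis words.
    dist = [[0] * (len(hyp) + 1) for _ in range(len(ref) + 1)]
    for i in range(len(ref) + 1):
        dist[i][0] = i
    for j in range(len(hyp) + 1):
        dist[0][j] = j
    for i in range(1, len(ref) + 1):
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            dist[i][j] = min(dist[i - 1][j] + 1,         # deletion
                             dist[i][j - 1] + 1,         # insertion
                             dist[i - 1][j - 1] + cost)  # substitution or match
    return dist[len(ref)][len(hyp)] / len(ref)


def entity_accuracy(entities: list[str], hypothesis: str) -> float:
    """Fraction of reference entities found verbatim (case-insensitive) in the hypothesis."""
    text = f" {' '.join(hypothesis.lower().split())} "
    hits = sum(f" {e.lower()} " in text for e in entities)
    return hits / len(entities)


reference = "please send forty milligrams of lisinopril to Dr Alvarez today"
entities = ["forty milligrams", "lisinopril", "Alvarez"]
hyp_a = "please send forty milligrams of lisinopril to doctor Alvarez"           # harmless slips
hyp_b = "please send fourteen milligrams of listen april to Dr Alvarez today"  # wrong dose and drug

for label, hyp in (("A", hyp_a), ("B", hyp_b)):
    print(f"{label}: WER={word_error_rate(reference, hyp):.2f}, "
          f"entities={entity_accuracy(entities, hyp):.2f}")
# A: WER=0.20, entities=1.00
# B: WER=0.30, entities=0.33
```

The WER gap between the two transcripts is small, yet only transcript B loses the dose and the drug name, which is exactly what an entity-focused score exposes.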

Video generation and editing have also improved markedly. Google’s Veo 3.1 now supports more expressive videos, native vertical formats, better consistency, and upscaling to 1080p and 4K. Companion tools and workflows add masking and multi-angle 3D reconstruction, opening new possibilities for filmmaking, narrative control, and digital effects. Frameworks such as Stream’s Vision Agents enable live video understanding for uses like sports coaching and threat detection.
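Live video understanding usually cannot afford to run a large model on every frame. One common pattern, assumed here rather than taken from Stream’s Vision Agents, is to sample frames at a fixed rate and pass only those to an analyzer. `sample_frames`, `run_analysis`, and the analyzer callback are hypothetical, and integers stand in for decoded frames.

```python
from typing import Callable, Iterable, Iterator, TypeVar

Frame = TypeVar("Frame")


def sample_frames(frames: Iterable[Frame], source_fps: float,
                  target_fps: float) -> Iterator[tuple[float, Frame]]:
    """Yield (timestamp_seconds, frame) pairs at roughly target_fps from a source_fps stream."""
    if not 0 < target_fps <= source_fps:
        raise ValueError("target_fps must be in (0, source_fps]")
    interval = 1.0 / target_fps
    next_due = 0.0
    for index, frame in enumerate(frames):
        timestamp = index / source_fps
        if timestamp + 1e-9 >= next_due:  # small tolerance for float rounding
            yield timestamp, frame
            next_due += interval


def run_analysis(frames: Iterable[Frame], source_fps: float, target_fps: float,
                 analyze: Callable[[Frame], str]) -> list[tuple[float, str]]:
    """Apply the (expensive) analyzer only to the sampled frames."""
    return [(ts, analyze(frame)) for ts, frame in sample_frames(frames, source_fps, target_fps)]


# Three seconds of a 30 fps stream, analyzed at 2 frames per second.
results = run_analysis(range(90), source_fps=30, target_fps=2, analyze=lambda f: f"frame {f}")
for ts, label in results:
    print(f"{ts:.1f}s {label}")
```

Lowering `target_fps` trades reaction time for compute, which is the main tuning knob in this design.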

Robotics, World Models, and Long-Term Context

Recent research shows progress toward general-purpose robotic intelligence through video pretraining and latent action spaces learned from unlabeled real-world videos. These latent actions support goal-conditioned planning and action transfer, even in complex environments and without explicit action labels. The NEO robotic agent demonstrates accurate control using text-to-video-conditioned world models.
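Latent-action methods are commonly formulated as an inverse-dynamics encoder paired with a forward (world) model, trained only on consecutive video frames. The equations below are a generic sketch of that formulation in our own notation ($o_t$ is the frame at time $t$, $z_t$ the latent action, $I_\phi$ the encoder, $F_\theta$ the forward model, $o_g$ a goal frame, $H$ the planning horizon); the specific works summarized above may use different objectives, such as discretized latents or extra regularizers.

```latex
\begin{aligned}
z_t &= I_\phi(o_t, o_{t+1}) && \text{(inverse dynamics: infer a latent action from two frames)}\\
\hat{o}_{t+1} &= F_\theta(o_t, z_t) && \text{(forward model: predict the next frame)}\\
\mathcal{L}(\phi, \theta) &= \sum_t \big\lVert F_\theta\big(o_t, I_\phi(o_t, o_{t+1})\big) - o_{t+1} \big\rVert_2^2 && \text{(no action labels required)}\\
z^\star_{t:t+H-1} &= \arg\min_{z_{t:t+H-1}} \big\lVert \hat{o}_{t+H} - o_g \big\rVert_2^2,
\quad \hat{o}_t = o_t,\;\; \hat{o}_{k+1} = F_\theta(\hat{o}_k, z_k) && \text{(goal-conditioned planning)}
\end{aligned}
```

For this to work, $z_t$ must be a bottleneck (low-dimensional or quantized); otherwise $I_\phi$ could simply copy $o_{t+1}$. One way to transfer the result to hardware is to train a small decoder on a few labeled robot trajectories that maps latent actions to motor commands.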

For long-context AI, new architectures that combine test-time training with meta-learning process sequences of up to 128K tokens efficiently, preserving quality while using far less computation than full-attention models. These methods point toward scalable long-context reasoning in future large language models.
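One way test-time training keeps cost linear in sequence length is to replace the growing attention cache with a small model whose weights take a gradient step on every token. The pure-Python sketch below follows the general TTT-Linear idea: the hidden state is a matrix $W$ updated to reduce $\lVert W k_t - v_t \rVert^2$ and then read out with a query. The random fixed projections, dimension, and learning rate are hypothetical choices, and the architectures summarized above add meta-learned initializations and other components not shown here.

```python
import random

Vector = list[float]
Matrix = list[list[float]]


def matvec(W: Matrix, x: Vector) -> Vector:
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def ttt_linear(tokens: list[Vector], lr: float = 0.1,
               seed: int = 0) -> tuple[list[Vector], list[float]]:
    """Run a TTT-style linear layer over a sequence.

    The hidden state is a d x d matrix W whose size does not grow with sequence
    length. For each token x_t, fixed projections give a key k, value v and
    query q; W takes one gradient step on the inner loss ||W k - v||^2 and the
    layer outputs W q. Returns the outputs and the inner loss before each update.
    """
    d = len(tokens[0])
    rng = random.Random(seed)
    # Hypothetical fixed projections; a trained model would learn these in the outer loop.
    proj = {name: [[rng.gauss(0.0, d ** -0.5) for _ in range(d)] for _ in range(d)]
            for name in ("key", "value", "query")}
    W = [[0.0] * d for _ in range(d)]
    outputs, losses = [], []
    for x in tokens:
        k, v, q = (matvec(proj[name], x) for name in ("key", "value", "query"))
        err = [a - b for a, b in zip(matvec(W, k), v)]  # W k - v
        losses.append(sum(e * e for e in err))
        # Gradient of ||W k - v||^2 with respect to W is 2 * err * k^T.
        for i in range(d):
            for j in range(d):
                W[i][j] -= lr * 2.0 * err[i] * k[j]
        outputs.append(matvec(W, q))
    return outputs, losses


# Feeding the same token repeatedly: the inner loss shrinks as W adapts at test time.
sequence = [[1.0, 0.0, 0.0, 0.0]] * 20
outputs, losses = ttt_linear(sequence)
print(f"{len(outputs)} outputs, inner loss {losses[0]:.4f} -> {losses[-1]:.4f}")
```

Each token costs a fixed $O(d^2)$ update, so total work grows linearly with length; full attention instead scores every pair of tokens, which at 128K tokens is on the order of $10^{10}$ query-key scores per head in each layer.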

Software Engineering and AI Development Practices

Software development is shifting toward agent-assisted work, in which AI partners increasingly help with coding, debugging, testing, and deployment. Claude Code and related tools are now used not only by development teams but across organizational roles, from finance to user research. Observability tools such as Honeycomb, integrated with AI, surface insights directly in the IDE and reduce context switching.

AI-enhanced continuous integration, experiment tracking, and environment setup are also being centralized and automated, as illustrated by Neptune’s migration to Lightning AI. Lightweight open tools, such as prek (a Rust implementation of pre-commit) and fully open-source voice cloning pipelines, give developers efficient workflows.

Industry and Community Trends

The AI ecosystem in 2025 and early 2026 has grown quickly, with new IPOs, open-source leadership, and growing contributions from Chinese and other research institutions worldwide that now extend beyond text into multimodal models and robotics. Enterprise adoption of AI copilots is rising, but actual daily usage varies widely, which underscores the need for better distribution and interface models such as Claude Cowork’s native desktop approach.

At CES 2026, NVIDIA introduced “NVIDIA Rubin,” a six-chip AI platform that advances GPU infrastructure and supports physical AI applications such as robotics and autonomous vehicles. Qualcomm described AI as interconnected and emphasized its value in automating tedious tasks for people. Meta and Google continue to advance their AI models and run collaborative labs for drug discovery and biotechnology.

Efforts to democratize AI development also include accelerated learning projects that teach core concepts from tokenization through fine-tuning and quantization, along with practical guides to building AI products and workflows.
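Quantization, one of the topics such courses cover, fits in a few lines. Symmetric per-tensor int8 quantization maps every weight to an integer in $[-127, 127]$ using one shared scale $s = \max_i |w_i| / 127$, so $q_i = \operatorname{round}(w_i / s)$ and $\hat{w}_i = s\,q_i$. This is a minimal sketch of one common scheme, not the method taught by any particular project, and the weights are arbitrary example values.

```python
def quantize_int8(weights: list[float]) -> tuple[list[int], float]:
    """Symmetric per-tensor int8 quantization: returns integer codes and the shared scale."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0  # avoid division by zero for all-zero tensors
    codes = [max(-127, min(127, round(w / scale))) for w in weights]
    return codes, scale


def dequantize(codes: list[int], scale: float) -> list[float]:
    return [q * scale for q in codes]


weights = [0.82, -1.27, 0.003, 0.5, -0.41]
codes, scale = quantize_int8(weights)
restored = dequantize(codes, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(codes, f"scale={scale:.4f}", f"max error={max_error:.4f}")
```

Storing one byte per weight instead of four (float32) cuts memory by roughly 4x, at the cost of a rounding error of at most half the scale per weight.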

Summary

The current AI landscape is marked by rapid evolution in agent systems, model capabilities, and workflows that blur the line between technical and non-technical users. New interfaces like Claude Cowork aim to make AI far more accessible for real-world tasks, while advanced models for medical imaging, speech synthesis, and video generation extend AI’s practical impact. Integrations with existing software infrastructure improve productivity and observability. Research in robotics, world models, and efficient long-context processing points toward more general intelligence. Meanwhile, community growth and innovative startups continue to fuel an expanding AI ecosystem.