AI, robotics, and related technologies gained significant momentum in late 2025 and early 2026, with several key developments highlighted by industry experts and organizations.

MiniMax M2.1 Release and AI Coding Models

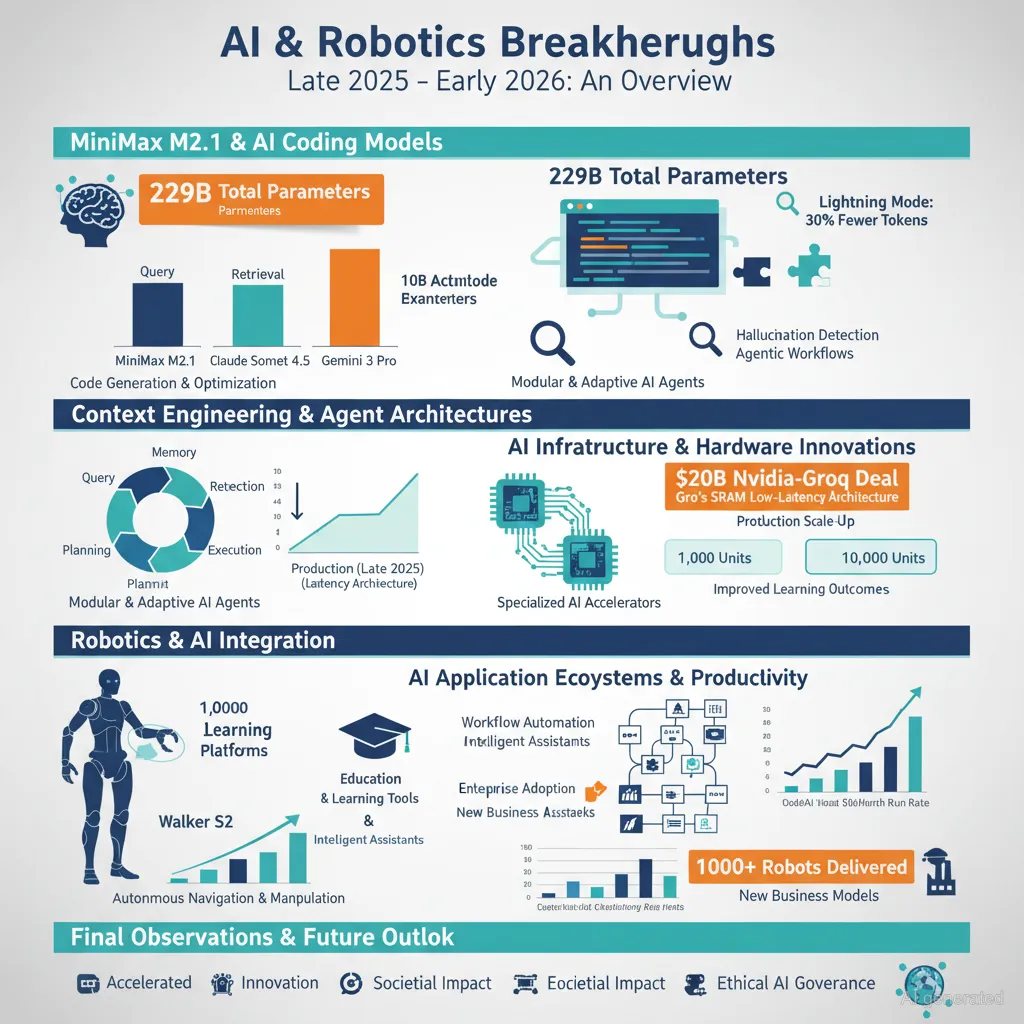

MiniMax-M2.1 has been released as an open-source, agent-native model with 229 billion total parameters, of which 10 billion (about 4%) are activated, under a modified MIT license. It outperforms competitors such as Claude Sonnet 4.5 and Gemini 3 Pro on multilingual coding benchmarks including SWE-Multilingual and VIBE-Bench. It supports full-stack app development, including for Android and iOS, and integrates with coding tools such as Cursor, Droid, and BlackBox. The model emphasizes efficiency: it consumes 30% fewer tokens, runs inference faster, and offers a “lightning mode” for high-throughput workflows. It also uses interleaved thinking for adaptive planning and error correction during complex tasks, and performs stably in agent workflows and context management systems.

In comparative evaluations with identical prompts and context, MiniMax-M2.1 reached usable results faster and with fewer corrections than Claude Sonnet 4.5. The benchmark gains reflect a shift toward agentic design in AI programming and suggest that code agents and agent-level tool use have become mainstream. This era emphasizes “outcome-oriented” AI, moving from traditional seat-based software licensing toward Results-as-a-Service models, exemplified by enterprises like China’s Bairong.

Context Engineering and AI Agent Architectures

Context engineering has emerged as a critical, though often underestimated, skill for effective AI development. It is the strategic design of the information that flows into large language models (LLMs), and it centers on six core components: query augmentation, retrieval, memory, agents, external tools, and prompting. Done well, it addresses challenges such as limited context windows and context confusion, enabling agents to coordinate complex workflows intelligently.
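As a rough illustration of how several of these components might fit together, the sketch below assembles a prompt from a system prompt, tool results, retrieved documents, and memory under a fixed token budget. Everything in it is hypothetical: the `ContextBuilder` class, the word-count "tokenizer", and the word-overlap retrieval are stand-ins chosen for brevity, not any framework's API. The agent loop that would call this builder is left out.

```python
from dataclasses import dataclass, field


@dataclass
class ContextBuilder:
    """Hypothetical helper that packs context sources into a token budget."""

    max_tokens: int
    memory: list[str] = field(default_factory=list)

    @staticmethod
    def count_tokens(text: str) -> int:
        # Crude stand-in for a real tokenizer: one token per word.
        return len(text.split())

    def augment_query(self, query: str) -> str:
        # Query augmentation (simplified): collapse stray whitespace.
        # A real system might rewrite or expand the query here.
        return " ".join(query.split())

    def retrieve(self, query: str, documents: list[str], k: int = 2) -> list[str]:
        # Retrieval (simplified): rank documents by word overlap with the
        # query and drop documents that share no words with it.
        terms = set(query.lower().split())
        scored = [(len(terms & set(d.lower().split())), d) for d in documents]
        ranked = sorted((s for s in scored if s[0] > 0), key=lambda s: s[0], reverse=True)
        return [doc for _, doc in ranked[:k]]

    def build(self, query: str, documents: list[str],
              tool_results: list[str], system_prompt: str) -> str:
        query = self.augment_query(query)
        # The system prompt and the query are always included.
        used = self.count_tokens(system_prompt) + self.count_tokens(query)
        # Optional sources in priority order: tools, retrieval, memory.
        optional = (
            [("tool", t) for t in tool_results]
            + [("retrieved", d) for d in self.retrieve(query, documents)]
            + [("memory", m) for m in self.memory]
        )
        kept = []
        for label, text in optional:
            cost = self.count_tokens(text)
            if used + cost <= self.max_tokens:  # skip anything that would overflow
                kept.append((label, text))
                used += cost
        parts = [("system", system_prompt), *kept, ("query", query)]
        return "\n".join(f"[{label}] {text}" for label, text in parts)


if __name__ == "__main__":
    builder = ContextBuilder(max_tokens=20, memory=["user prefers metric units"])
    docs = ["Paris is the capital of France", "Bananas are yellow"]
    print(builder.build("capital of   France?", docs, ["weather: 18C"], "Answer briefly."))
```

The priority order is a design choice made for this sketch. A production system would more likely score each candidate for relevance and summarize or truncate items rather than drop them whole.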

Complementary efforts have introduced self-improving agentic models that use reinforcement learning to learn reusable skills from past interactions, significantly improving task efficiency and reducing redundant outputs. Related self-play methods represent a paradigm shift akin to AlphaGo’s emergence in gaming: models generate their own training curriculum without requiring human-curated datasets.

In parallel, advances in causal modeling have enabled LLMs to internally build causal graphs and perform counterfactual reasoning, surpassing conventional causal discovery algorithms. This capability could redefine causal inference across scientific, economic, and policy disciplines.

AI Infrastructure and Hardware Innovations

Nvidia’s strategic $20 billion licensing and hiring deal with Groq highlights the importance of optimized AI inference hardware. Groq’s architecture, based on SRAM rather than traditional GPU memory types like HBM, achieves extremely low-latency token decoding, crucial for real-time AI applications such as agentic reasoning. Nvidia plans to utilize a mix of specialized chips to optimize different inference stages, balancing memory capacity and speed for prefill and decode processes.
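To see why prefill and decode might favor different chips, a back-of-the-envelope estimate helps. The sketch below uses two common rules of thumb: a forward pass costs roughly 2 FLOPs per active parameter per token, so prefill tends to be compute-bound, while batch-size-1 decode must read every active weight once per generated token, so it tends to be bound by memory bandwidth. The two accelerators and all their numbers are invented for illustration and do not describe any Nvidia or Groq product. The estimate also ignores KV-cache traffic, batching, and the fact that a model may need to be sharded across many chips when per-chip memory is small.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Accelerator:
    """Hypothetical chip described by two headline numbers."""

    name: str
    peak_flops: float     # FLOP/s
    mem_bandwidth: float  # bytes/s


def prefill_seconds(active_params: float, prompt_tokens: int, chip: Accelerator) -> float:
    # Compute-bound estimate: ~2 FLOPs per active parameter per prompt token.
    return 2 * active_params * prompt_tokens / chip.peak_flops


def decode_seconds_per_token(active_params: float, bytes_per_param: float,
                             chip: Accelerator) -> float:
    # Bandwidth-bound estimate: each active weight is read once per token.
    return active_params * bytes_per_param / chip.mem_bandwidth


# Illustrative numbers only, not real product specifications.
hbm_chip = Accelerator("hbm-style", peak_flops=1e15, mem_bandwidth=3e12)
sram_chip = Accelerator("sram-style", peak_flops=5e14, mem_bandwidth=5e13)

ACTIVE_PARAMS = 10e9   # assumed 10B active parameters
BYTES_PER_PARAM = 1.0  # assumed 8-bit weights

if __name__ == "__main__":
    for chip in (hbm_chip, sram_chip):
        prefill = prefill_seconds(ACTIVE_PARAMS, 1000, chip)
        decode = decode_seconds_per_token(ACTIVE_PARAMS, BYTES_PER_PARAM, chip)
        print(f"{chip.name}: prefill {prefill * 1e3:.1f} ms, decode {decode * 1e3:.2f} ms/token")
```

Under these assumed numbers the higher-FLOPs chip finishes prefill sooner, while the higher-bandwidth chip produces each decoded token faster, which is the trade-off a mixed-chip design tries to exploit.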

Simultaneously, breakthroughs in hybrid attention architectures such as Block-Diffusion significantly enhance the efficiency of diffusion language models by reducing the computational complexity of decoding, enabling scalable and efficient execution of long-context or multimodal generative tasks.

Robotics and AI Integration

Robotics has advanced through tangible industrial deployments: over 1,000 units of the Walker S2 humanoid robot have been produced and more than 500 delivered for active use, with a target of scaling capacity to 10,000 units by 2026. Innovations like MIT’s loop closure grasping mechanism enable robotic manipulators to handle heavy and fragile objects gently but securely. Demonstrations of physical AI include humanoid robots performing synchronized movements on stage at entertainment venues, reflecting the integration of AI with real-world robotics.

AI-enabled platforms for gamified remote data collection in robotics (e.g., RoboCade) are making the process more scalable and engaging, addressing traditional data bottlenecks in robotic learning.

Education, Knowledge, and Learning Tools

Notable educational initiatives include Stanford’s full AI/ML curriculum made available for free on YouTube, providing direct access to lectures that shape frontier AI research. Google also introduced “Learn Your Way,” an AI-powered personalized learning tool that reshapes textbook content based on individual interests and grade levels, thereby enhancing retention and engagement.

Furthermore, SciencePedia, an AI-generated and verified knowledge base of long chain-of-thought reasoning, uses multiple independent LLMs to generate and cross-validate first-principles reasoning chains across scientific domains, reducing hallucinations and increasing the density of reliable knowledge.
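The general idea of cross-validating independent generators can be sketched in a few lines. The example below is a simplified assumption, not SciencePedia's actual pipeline: each "model" is a plain Python callable standing in for an LLM, only final answers are compared rather than full reasoning chains, and an answer is accepted only when at least `min_agree` models agree after light normalization. The names `cross_validate` and `normalize` are hypothetical.

```python
from collections import Counter
from typing import Callable, Optional, Sequence

# A "model" here is any callable mapping a question to an answer string.
Model = Callable[[str], str]


def normalize(answer: str) -> str:
    # Light normalization so trivial formatting differences do not count
    # as disagreement: lowercase, collapse whitespace, drop trailing period.
    return " ".join(answer.lower().split()).rstrip(".")


def cross_validate(question: str, models: Sequence[Model], min_agree: int) -> Optional[str]:
    """Return the most common answer if enough models agree, else None."""
    if not models:
        raise ValueError("at least one model is required")
    votes = Counter(normalize(model(question)) for model in models)
    answer, count = votes.most_common(1)[0]
    return answer if count >= min_agree else None


if __name__ == "__main__":
    # Stub models standing in for independent LLMs.
    stubs = [
        lambda q: "9.8 m/s^2",
        lambda q: "9.8 M/S^2.",
        lambda q: "10 m/s^2",
    ]
    print(cross_validate("Standard gravity near Earth's surface?", stubs, min_agree=2))
```

Returning `None` on disagreement lets a caller route the question to further checking instead of storing an unverified answer.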

AI Application Ecosystems and Productivity Tools

The ecosystem of AI tools continues to expand rapidly, with platforms like Dify offering visual and code-backed agent workflow management, and the release of diverse open-source libraries and client SDKs supporting multiple programming languages such as C# and Java.

Meanwhile, AI-enhanced products are improving content creation, business automation, marketing, and insight into SaaS feature adoption. Examples include AI video avatar generation at scale, voice control for consistent audio in video production, and automated compliance reporting tools targeting regulated industries with substantial market demand.

AI Models and Benchmarking

Open-source models show remarkable progress, with models like LFM2-2.6B-Exp outperforming larger competitors, and the GLM 4.7 model ascending in rankings. Research reveals that large causal models derived from LLMs better emulate human expert reasoning by decomposing complex problems into atomic sub-tasks, elevating efficiency and reliability.

FaithLens, an 8B parameter model, effectively detects and explains hallucinated claims within AI outputs, enabling practical, low-cost verification for applications requiring high factual fidelity.

The AI community is also focusing on training and inference workflows, as seen in projects like Mini-SGLang, which offers a readable, production-grade LLM serving stack demonstrating state-of-the-art inference techniques including FlashAttention-3 and efficient GPU utilization.

Market and Industry Insights

Growth in AI users and revenue has been explosive: OpenAI tripled its weekly active users in 12 months and hit a $1 billion monthly run rate by October 2025. Meanwhile, competitors like Google’s Gemini are rapidly gaining market share through integration into widely used products, shifting market dynamics.

The AI-driven surge has also contributed to impressive net worth gains for prominent tech founders and catalyzed unprecedented capital expenditures in AI hardware and data center investments worldwide.

Final Observations

The landscape of AI technologies and applications has accelerated beyond prior expectations, entering a phase characterized by agentic autonomy, continuous learning, and integration with real-world systems. Emphasis on context engineering, efficient inference, and model verification is shaping robust AI deployments.

Developers and organizations able to engage early with these evolving paradigms, leveraging foundational engineering best practices such as CI/CD, testing, and documentation, stand to gain significant productivity multipliers and redefine software development itself.

The year 2026 is anticipated to further this transformation, moving AI from assistive tools toward autonomous, reliable agents capable of complex, goal-driven workflows in diverse sectors.