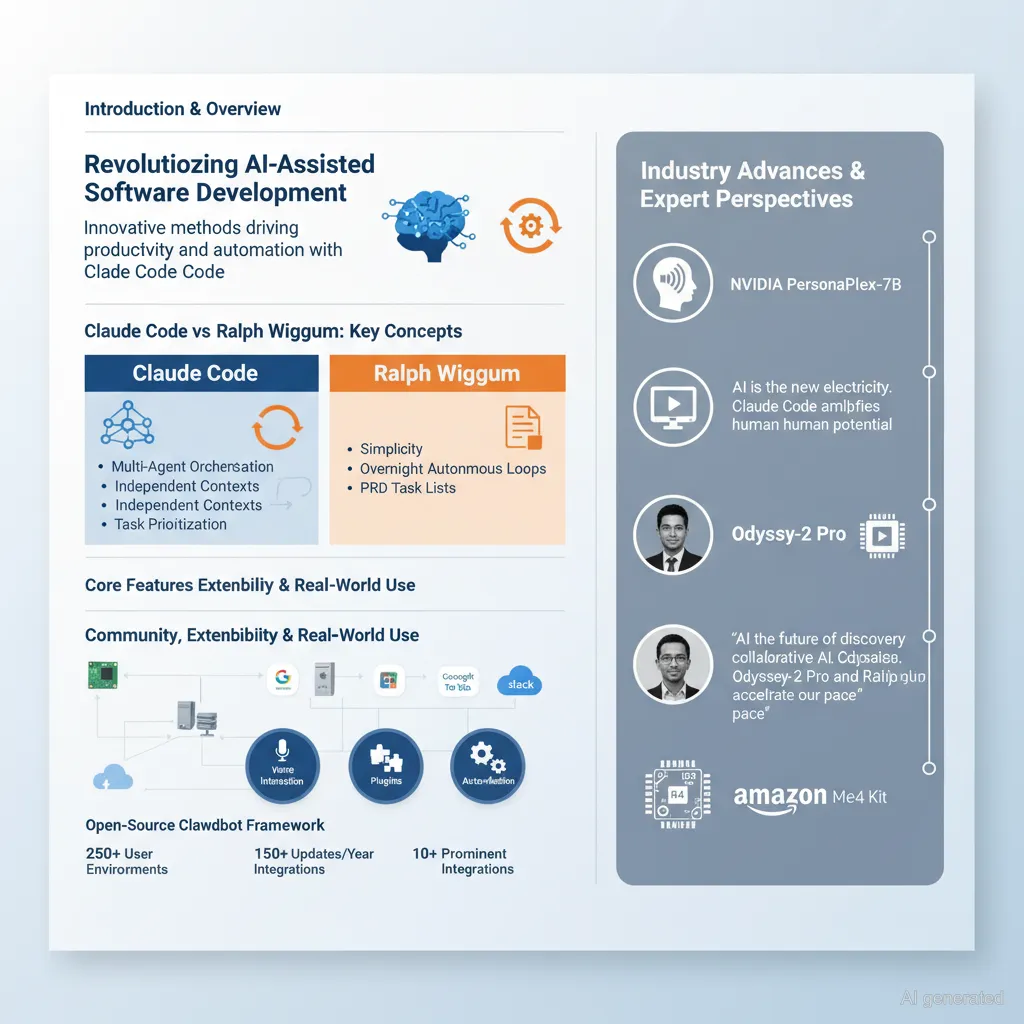

There has been significant buzz around Claude Code and the Ralph Wiggum technique, two approaches reshaping AI-assisted software development. Claude Code, Anthropic’s coding agent running on the Opus 4.5 model, has drawn attention for boosting productivity and letting users build complex applications. The Ralph Wiggum technique, developed by Geoffrey Huntley, is a simpler loop-based approach that uses scripts and configuration files to run tasks unattended, often overnight. The user describes a project, generates a Product Requirements Document (PRD) with a task list, and then lets the AI work through that list autonomously with no further intervention. Both approaches demonstrate the power of AI-driven development, with Ralph emphasizing simplicity and Claude Code focusing on scalability and efficiency.
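The loop described above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not Huntley's actual script: the task-list shape (`id`, `title`, `done`) and the `run_agent` callable are hypothetical stand-ins for a PRD task file and a single fresh agent invocation.

```python
from typing import Callable


def ralph_loop(
    tasks: list[dict],
    run_agent: Callable[[dict], bool],
    max_iterations: int = 50,
) -> int:
    """Hand the first unfinished task to the agent until none remain.

    `run_agent` is a hypothetical callable standing in for one agent run;
    it returns True when the task was completed. The function returns the
    number of iterations used.
    """
    iterations = 0
    while iterations < max_iterations:
        pending = [t for t in tasks if not t["done"]]
        if not pending:
            break  # every task in the PRD is finished
        task = pending[0]
        iterations += 1
        # Each pass starts from the task list, not from earlier chat history.
        if run_agent(task):
            task["done"] = True
    return iterations


if __name__ == "__main__":
    # Hypothetical PRD task list, normally loaded from a file.
    prd_tasks = [
        {"id": 1, "title": "Scaffold project", "done": False},
        {"id": 2, "title": "Add login page", "done": False},
    ]
    used = ralph_loop(prd_tasks, run_agent=lambda task: True)
    print(f"finished after {used} iterations")
```

The `max_iterations` cap is a design choice added here for safety: an unattended loop that retries a failing task forever would otherwise keep consuming compute overnight.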

A noteworthy evolution in Claude Code is its introduction of Task Agents, representing a significant advancement over the Ralph Wiggum loop. These Task Agents operate concurrently to manage different aspects of the development process: one oversees the chat user interface, another handles execution and timeline management, while others focus on settings, integrations, automated Playwright tests, and more. Each agent has an independent context window, preventing interference and enabling efficient long-running sessions, even overnight, without losing state. The system intelligently prioritizes tasks that block others, ensuring blockers are resolved first, which enhances overall workflow efficiency. This multi-agent setup is described as highly satisfying to observe, with 7-8 agents collaborating seamlessly.
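To make the blocker-first idea concrete, here is a small sketch of one way such an ordering could be computed. It is an assumption for illustration only, not Claude Code's actual scheduler: the `prioritize` function, the `blocked_by` mapping, and the task names are all hypothetical, and the sketch orders tasks sequentially rather than modelling concurrent agents.

```python
def prioritize(blocked_by: dict[str, set[str]]) -> list[str]:
    """Return an order in which dependencies come before their dependents.

    `blocked_by` maps each task to the set of tasks it waits on. Among the
    tasks that are ready to run, the one that directly blocks the most
    other tasks is chosen first (ties are broken alphabetically).
    """
    # Reject dependencies on tasks that are not in the task list.
    for task, deps in blocked_by.items():
        unknown = deps - blocked_by.keys()
        if unknown:
            raise KeyError(f"{task} depends on unknown tasks: {sorted(unknown)}")

    # Count how many tasks directly wait on each task.
    blocks = {task: 0 for task in blocked_by}
    for deps in blocked_by.values():
        for dep in deps:
            blocks[dep] += 1

    remaining = {task: set(deps) for task, deps in blocked_by.items()}
    order: list[str] = []
    while remaining:
        ready = [task for task, deps in remaining.items() if not deps]
        if not ready:
            raise ValueError("dependency cycle detected")
        nxt = min(ready, key=lambda task: (-blocks[task], task))
        order.append(nxt)
        del remaining[nxt]
        # Completing a task unblocks everything that was waiting on it.
        for deps in remaining.values():
            deps.discard(nxt)
    return order


if __name__ == "__main__":
    # Hypothetical task graph loosely based on the agent roles above.
    graph = {
        "api": set(),
        "settings": set(),
        "chat_ui": {"api"},
        "timeline": {"api"},
        "playwright_tests": {"chat_ui", "timeline"},
    }
    print(prioritize(graph))
```

In this example `api` is scheduled before `settings` even though both are ready at the start, because two other tasks are waiting on it.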

Community engagement has played a crucial role in developing and popularizing these AI tools. A wide range of users, including those with little programming experience, have embraced Claude Code and the Ralph technique to build applications and automate workflows. Examples include using Claude Code and Clawdbot, a separate open-source assistant framework, for app improvement workflows, task automation, code generation, and integration with productivity and communication tools like Google services, Microsoft To Do, and various messaging platforms. Users have reported setting up Clawdbot on diverse hardware environments such as Raspberry Pi, Mac Mini, Ubuntu VMs, and AWS Free Tier servers. The extensibility of these tools through plugins, skills, and integrations, together with Clawdbot's open-source nature, lets users tailor workflows, automate routine tasks, and substantially improve productivity.

The open-source Clawdbot framework, in particular, has attracted wide acclaim for combining advanced AI capabilities with accessibility. It runs locally or on cloud hardware, offering full autonomy and privacy. It supports powerful multi-agent orchestration, voice interaction via WebRTC and speech recognition, and direct integration with various APIs and hooks. The system automates many typical development and management tasks, including creating pull requests, documenting work, cross-referencing test notes, and even managing home automation workflows. Ongoing updates (with frequent, substantial changelogs) continue to add features such as improved text-to-speech (TTS), Slack threading, iMessage support, and agent invocation APIs.

In parallel with these developments, the community emphasizes the critical role of tooling and ecosystem-building around AI models rather than focusing solely on improving raw model performance. There is a growing consensus that the future of AI lies in practical, production-ready tools that leverage existing powerful models like Anthropic's Opus 4.5 and local open-source alternatives. Distributed multi-agent collaboration, recursive self-improvement, and seamless integration with user workflows are seen as transformative for software engineering.

Other noteworthy industry updates include the release of NVIDIA’s PersonaPlex-7B, a fully open-source voice AI model capable of real-time, uninterrupted conversational interaction, and advancements in video generation technology like Odyssey-2 Pro, which supports interactive, long-running simulations. Additionally, the Arduino Starter Kit R4 has been launched to facilitate entry into autonomous tech projects, and the broader AI ecosystem continues advancing with contributions from labs such as Google DeepMind, Meta AI, and Amazon, which are publishing roadmaps for large language model (LLM) agents with planning, tool use, memory, and collaboration capabilities.

Industry leaders and researchers stress the significance of integrating AI deeply into practical workflows. Andrew Ng argues that future professionals must learn to program and use AI tools to remain relevant, as coding and automation become essential skills across disciplines, including marketing and finance. Demis Hassabis of DeepMind envisions AI systems as instrumental in addressing societal challenges like climate change and disease.

In summary, the convergence of Claude Code’s multi-agent orchestration, the Ralph Wiggum technique’s automated looping, and community-driven plugin ecosystems represents a major shift toward autonomous, AI-powered development environments. These advancements promise to make sophisticated software creation accessible to a wider audience, increase productivity for experienced developers, and drive forward an era where AI systems continuously improve their own capabilities and assist humans in complex, real-world tasks.