The news roundup highlights significant progress and insights across AI research, product development, and industry transformations as 2026 unfolds.

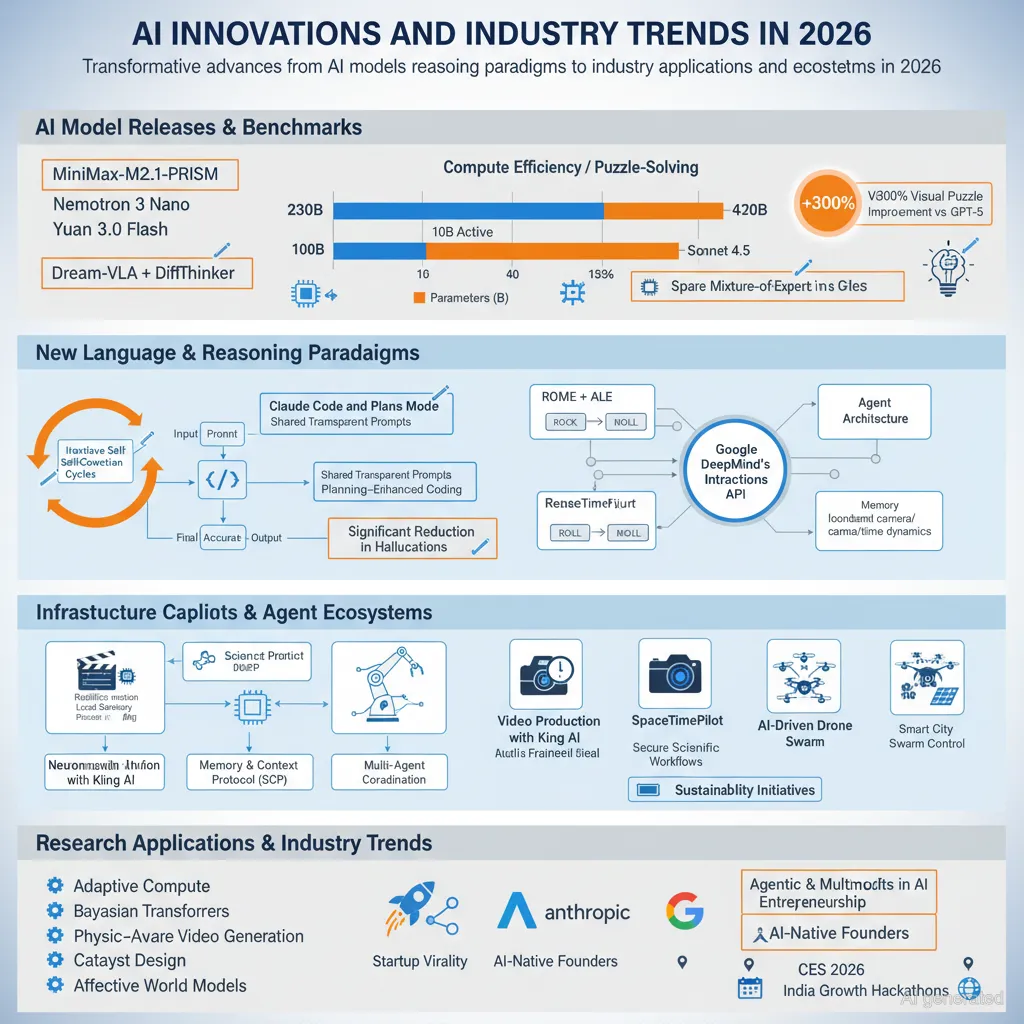

AI Model Releases and Benchmarks:

Several new or updated AI models were released recently, many claiming stronger performance with less compute. Notably, MiniMax-M2.1-PRISM, a 230B-parameter model by MiniMax AI with 10B active parameters and no guardrails, is now available; it reportedly runs locally on standard hardware and outperforms Anthropic’s Sonnet 4.5. It is billed as offering full capability and zero refusals, signaling a shift toward open, uncensored models in 2026. Nemotron 3 Nano also reportedly delivers sharp reasoning on low-end GPUs and CPUs.

Meanwhile, AI research teams presented new models and methods that challenge traditional scaling norms. For example, Yuan 3.0 Flash employs a sparse Mixture-of-Experts architecture that activates only a fraction of its parameters per inference, aiming for stronger results without a proportional increase in compute. Tencent and others unveiled lightweight agentic LLMs trained from scratch to reason, plan, and self-correct across long workflows, significantly boosting bug-fix success rates.
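
To make the sparse Mixture-of-Experts idea concrete, here is a minimal sketch of top-k expert routing in plain Python. It is not Yuan 3.0 Flash's actual architecture: the function names (`top_k_route`, `moe_forward`), the toy scalar experts, and the gate scores are all assumptions chosen for illustration.

```python
import math

def top_k_route(gate_logits, k):
    """Pick the k highest-scoring experts and softmax-normalize their scores."""
    # Stable sort: ties keep their original index order.
    top = sorted(range(len(gate_logits)), key=lambda i: gate_logits[i], reverse=True)[:k]
    peak = max(gate_logits[i] for i in top)
    exps = [math.exp(gate_logits[i] - peak) for i in top]
    total = sum(exps)
    return [(i, e / total) for i, e in zip(top, exps)]

def moe_forward(x, experts, gate_logits, k=2):
    """Run only the selected experts and mix their outputs by gate weight."""
    routes = top_k_route(gate_logits, k)
    output = sum(weight * experts[i](x) for i, weight in routes)
    return output, [i for i, _ in routes]

# Hypothetical toy experts: each one just scales its input.
experts = [lambda x, s=s: s * x for s in (1.0, 2.0, 3.0, 4.0)]

# In a real model the gate logits come from a learned router; here they are fixed.
output, used = moe_forward(1.0, experts, gate_logits=[0.1, 2.0, -1.0, 2.0], k=2)
print(used, output)
```

Only two of the four experts run for this input, which is the source of the compute savings: total parameter count grows with the number of experts, while per-token cost grows with `k`.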

Major advances in vision-language and multimodal reasoning are evidenced by Dream-VLA and DiffThinker. DiffThinker recasts multimodal reasoning as image-to-image diffusion generation and reportedly outperforms GPT-5 by over 300% on visual puzzles, suggesting that diffusion models bring useful spatial and logical precision to such tasks.

New Paradigms in Language Models and Reasoning:

Recursive Language Models (RLMs) are posited as the defining paradigm of 2026. They let models treat their own prompts as manipulable objects via code that calls LLMs, improving reasoning through iterative self-correction. Empirical studies reportedly show that recursive passes improve accuracy and reduce hallucinations more effectively than simply increasing model size. This aligns with the focus at OpenAI and other labs on agentic workflows, where AI agents learn to use tools reliably, verify their own work, and complete complex tasks robustly.
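
One way to read "code that calls LLMs" over a prompt is recursive decomposition: split an oversized context, query each piece, then query again over the partial answers. The sketch below assumes that reading and is not a published RLM implementation. `recursive_answer` and `fake_llm` are hypothetical names, and `fake_llm` is a keyword-matching stand-in for a real model call.

```python
def recursive_answer(llm, question, lines, max_lines=4):
    """Answer a question over `lines`, recursing when the context is too long."""
    if len(lines) <= max_lines:
        return llm(question, lines)
    mid = len(lines) // 2
    # Each half is answered independently, then the partial answers
    # become the (much shorter) context for one more call.
    partial = [
        recursive_answer(llm, question, lines[:mid], max_lines),
        recursive_answer(llm, question, lines[mid:], max_lines),
    ]
    return llm(question, partial)

calls = []  # records the context size seen by each call

def fake_llm(question, lines):
    """Stand-in for a model: returns the first line mentioning 'build id'."""
    calls.append(len(lines))
    hits = [line for line in lines if "build id" in line]
    return hits[0] if hits else "no relevant information"

document = [f"filler line {i}" for i in range(15)] + ["the build id is 7421"]
answer = recursive_answer(fake_llm, "What is the build id?", document)
print(answer, len(calls), max(calls))
```

No single call ever sees more than `max_lines` lines, so the approach trades a larger number of calls for a bounded context per call.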

Also of note are innovations in prompting techniques and workflows. Claude Code exemplifies how shared, transparent prompts and verification loops improve coding quality and productivity. Its Plan Mode improves code quality and reduces errors by planning changes before any code is written.

AI Infrastructure, Agent Ecosystems, and Tooling:

The development of scalable agent architectures and ecosystems is accelerating. The ROME model and ALE ecosystem introduce infrastructure such as ROCK and ROLL for sandboxed training and asynchronous rollout, supporting sophisticated agent learning and evaluation in open environments. Google DeepMind has launched the Interactions API to unify access to Gemini models and agents, enabling advanced agentic capabilities.

Memory and context are addressed through novel frameworks that link cognitive neuroscience to agent memory design. The goal is for agents to remember and reuse information over long interactions while avoiding context window overflow and noise accumulation.
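
As a rough illustration of the overflow problem, the sketch below keeps a short window of recent messages verbatim and stores only a compressed trace of older ones. It is a generic pattern, not any of the frameworks mentioned above. `BoundedMemory` is a hypothetical class, and truncation stands in for what would normally be an LLM-written summary.

```python
from collections import deque

class BoundedMemory:
    """Keep recent messages in full and older ones only as short summaries."""

    def __init__(self, max_recent=3, summarize=None):
        self.recent = deque()
        self.max_recent = max_recent
        self.summary = []
        # Assumption: truncation is a placeholder for a real summarizer.
        self.summarize = summarize or (lambda text: text[:20])

    def add(self, message):
        self.recent.append(message)
        # Evict the oldest messages, keeping only their compressed form.
        while len(self.recent) > self.max_recent:
            self.summary.append(self.summarize(self.recent.popleft()))

    def context(self):
        """Context for the next model call: summaries first, then recent turns."""
        return list(self.summary) + list(self.recent)

memory = BoundedMemory(max_recent=3)
for i in range(5):
    memory.add(f"step {i}: " + "detail " * 10)

for entry in memory.context():
    print(repr(entry))
```

The summary list still grows by one short entry per evicted message, so a longer-running agent would also need to merge or prune summaries periodically.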

Tool integration and multi-agent collaboration advance through new protocols like Science Context Protocol (SCP), which fosters secure, interoperable scientific workflows combining computational models, databases, and experiments to automate discovery.

Moreover, developer tools like Claude Code, together with integrations with MCP and Opencode, are improving the developer experience through multi-agent coordination, skill-based workflows, and environment automation.

Emerging Applications and Industry Trends:

Industries are witnessing rapid adoption of AI-powered creativity and automation. Kling AI showcases state-of-the-art realistic motion control in video production, while Adobe and Cambridge research introduce SpaceTimePilot, enabling AI-generated video frame rendering with independent control of camera motion and time dynamics.

In the consumer app space, the paradigm is shifting toward rapid, focused app creation optimized for viral engagement and iteration, supported by AI-driven UX/UI enhancements and workflow automation.

In robotics, a biologically inspired neuromorphic robotic skin processes sensory input locally to trigger immediate reflexes, improving responsiveness in humanoids. Meanwhile, large-scale coordinated drone shows demonstrate AI-driven swarm control, pointing toward future smart city applications.

Energy and sustainability also feature, with ADS TEC winning significant battery storage contracts and novel ideas like lunar solar farms under discussion as future clean energy solutions.

Research Highlights in Machine Learning and Scientific AI:

Multiple papers report breakthroughs on adaptive test-time compute, Bayesian reasoning in transformers, physics-aware text-to-video generation, and AI-driven catalyst design integrating thermodynamics and interpretability.

AI’s potential in scientific discovery is emphasized by work enabling language models to plan research independently, assessing their outputs against rubric-based grading extracted from published papers, thereby scaling scientific reasoning without human oversight.

Another trend is the integration of affective components into world models. This work treats emotions as integral, rather than peripheral, to understanding human behavior, with the aim of improving how AI models social and emotional contexts.

Industry Movements and Cultural Insights:

Reports highlight how firms like Anthropic succeed by focusing on enterprise and coding-centric AI and by fostering strong team cultures. Meanwhile, OpenAI and Google continue to iterate rapidly on models and tools while planning next-generation releases.

There is also cultural discussion around younger AI-native founders leveraging virality and AI as an operating system to circumvent traditional economic barriers, signaling generational shifts.

Events and Community:

CES 2026 approaches with Kling AI and others showcasing AI-driven innovation in creativity and storytelling. Growth hackathons in India demonstrate entrepreneurial energy in emerging markets, with real paying customers secured during events.

In summary, 2026 is shaping up as a transformative year in which AI moves from single-shot models to iterative, agentic, and multimodal systems; from siloed research to open ecosystems; and from fundamental R&D to robust industry applications across coding, multimedia, robotics, and scientific discovery. The convergence of recursive reasoning, agent infrastructure, and extensive fine-tuning suggests AI will become more reliable, more interactive, and more deeply embedded in workflows than before.