Overview of New Open-Source AI Models: GLM 4.7 and MiniMax M2.1

Within 24 hours of each other, two state-of-the-art open-source AI models were released: GLM 4.7, from Chinese AI startup Zhipu AI, and MiniMax M2.1. Both have notably narrowed the performance gap with the large closed-source labs, especially in coding and general reasoning tasks, and deliver output quality comparable to proprietary models such as Claude Sonnet 4.5 and GPT-5.1 at a fraction of the cost. This marks a significant democratization of advanced AI capabilities, allowing developers, researchers, and enterprises to self-host and build customized solutions efficiently.

China’s strategic approach is clear: shipping strong base models under open licenses lets startups and researchers fine-tune, deploy, and innovate rapidly on top of these foundations. GLM 4.7 is released under an MIT License and is freely accessible, offering a powerful alternative to premium-priced closed-source models.

—

Technical and Performance Highlights of GLM 4.7

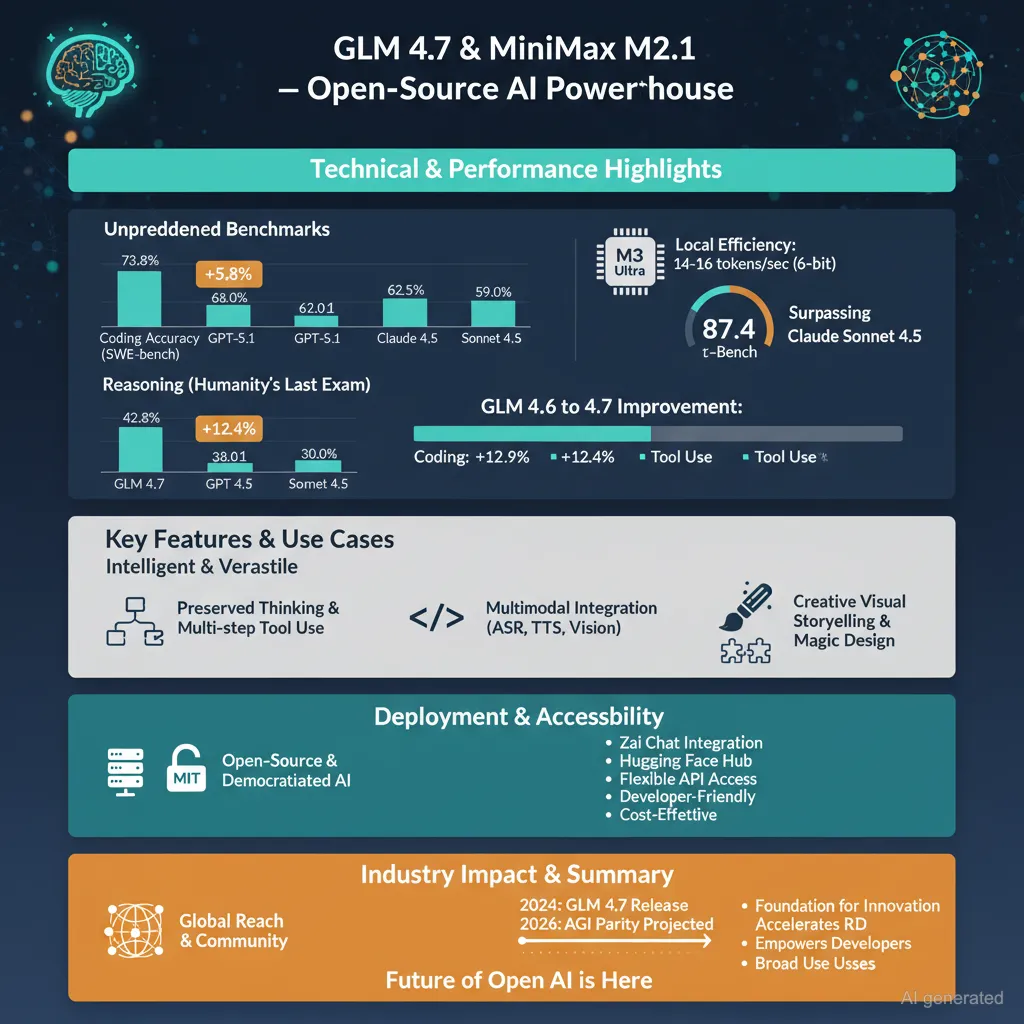

GLM 4.7 stands out with numerous improvements over its predecessor GLM 4.6:

– Coding Performance: Scores 73.8% on SWE-bench (+5.8 points over GLM 4.6), 66.7% on SWE-bench Multilingual (+12.9 points), and 41% on Terminal Bench 2.0 (+16.5 points). These results match or surpass those of proprietary models such as GPT-5.1 and Claude Sonnet 4.5, which typically dominate these benchmarks.

– Reasoning Ability: Shows substantial gains in complex reasoning tasks, scoring 42.8% on the Humanity’s Last Exam (HLE) benchmark, a 12.4-point improvement over GLM 4.6.

– Tool Use & Agent Capabilities: Demonstrates robust multi-step tool usage in interactive workflows, reflected by an 87.4 score on τ2-Bench, outperforming Claude Sonnet 4.5. Its long-context memory and preserved thinking mechanism allow it to plan, reason, and execute with coherence over long conversations.

– Multimodal Integration: Utilizes a “Skill” architecture that orchestrates Automatic Speech Recognition (ASR), Text-to-Speech (TTS), Vision (VLM), and the large language model (LLM) in real time, enabling simultaneous seeing, hearing, speaking, and reasoning.

– Efficiency & Scale: Capable of handling up to 200K tokens of context and 128K tokens output, suitable for processing very large codebases and complex tasks.
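The benchmark deltas quoted in this list read most naturally as percentage-point changes rather than relative gains. The following is a minimal sketch, using only the scores and deltas listed above and assuming each delta is in points, that recovers the implied GLM 4.6 scores and the corresponding relative improvement. The function names are illustrative.

```python
# Scores and deltas as quoted in the list above.
# Assumption: each delta is in percentage points, not a relative percentage.
RESULTS = {
    "SWE-bench": (73.8, 5.8),
    "SWE-bench Multilingual": (66.7, 12.9),
    "Terminal Bench 2.0": (41.0, 16.5),
    "HLE": (42.8, 12.4),
}


def implied_baseline(score: float, delta_points: float) -> float:
    """Predecessor score implied by a percentage-point delta."""
    return round(score - delta_points, 1)


def relative_gain(score: float, delta_points: float) -> float:
    """Relative improvement over the implied baseline, in percent."""
    baseline = score - delta_points
    return round(100.0 * delta_points / baseline, 1)


if __name__ == "__main__":
    for name, (score, delta) in RESULTS.items():
        print(
            f"{name}: {implied_baseline(score, delta)} -> {score} "
            f"(+{delta} points, {relative_gain(score, delta)}% relative)"
        )
```

Read this way, the 16.5-point gain on Terminal Bench 2.0 is a far larger relative jump than the 5.8-point gain on SWE-bench, because the implied starting score is much lower.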

Benchmarks show it runs efficiently on hardware such as Apple’s M3 Ultra chip, with tests at near-lossless 6-bit precision reaching 14-16 tokens per second locally.
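To put the 14-16 tokens per second figure in perspective, here is a rough back-of-the-envelope sketch of how long local generation would take at that rate. It assumes constant decoding speed, ignores prompt processing time, and treats "128K" as 128,000 tokens. The helper names are illustrative.

```python
def generation_time_seconds(num_tokens: int, tokens_per_second: float) -> float:
    """Time to decode num_tokens at a constant rate (prompt processing ignored)."""
    if tokens_per_second <= 0:
        raise ValueError("tokens_per_second must be positive")
    return num_tokens / tokens_per_second


def format_duration(seconds: float) -> str:
    """Render a duration in seconds as 'Hh MMm SSs'."""
    minutes, secs = divmod(int(round(seconds)), 60)
    hours, minutes = divmod(minutes, 60)
    return f"{hours}h {minutes:02d}m {secs:02d}s"


if __name__ == "__main__":
    # Assumption: "128K" output tokens taken as 128,000.
    for rate in (14.0, 16.0):
        for tokens in (2_000, 128_000):
            elapsed = generation_time_seconds(tokens, rate)
            print(f"{tokens:>7} tokens at {rate:.0f} tok/s: {format_duration(elapsed)}")
```

At these rates a typical few-thousand-token answer takes a couple of minutes, while a maximum-length output would run for more than two hours, so local use at this speed fits interactive coding better than very long generations.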

—

Key Features and Use Cases

– Preserved Thinking: Unlike many models that discard their reasoning as a conversation progresses, GLM 4.7 remembers its thought process across multiple steps and retains it as hidden state, which suits complex, multi-turn workflows such as coding and app design.

– Vibe Coding and UI Quality: Delivers code that is not only functional but aesthetically refined. The model excels in creating clean, modern-looking web pages, slides, and posters with intelligent layout, balanced fonts, consistent color harmony, and adherence to formats such as 16:9 presentations (accuracy improved from 52% to 91%).

– Interactive and Production-Ready Outputs: Moves beyond static code generation to produce fully clickable, responsive interfaces where logic, UI, and events remain connected end-to-end. This makes it suitable for building real applications directly from prompts rather than mockups.

– Magic Design for Visual Storytelling: It masterfully blends diverse artistic styles (Y2K, anime, retro, cinematic) for social media posters, year-end recaps, and curated product visuals that stand out with expressive, well-structured design without manual tweaks.

– Long-Context Handling & Tool Calls: Executes multiple tool calls efficiently within a single session, enabling complex automation such as a robotic food delivery simulation, and recalls information at context lengths above 190K tokens with accuracy of up to 95%.

Such capabilities position GLM 4.7 as a comprehensive AI copilot for software development, research, content creation, and creative storytelling.
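The idea behind preserved thinking can be illustrated with a small, purely conceptual sketch of conversation history management. This is not GLM 4.7's actual API or internal mechanism: the `Turn` class, the `reasoning` field name, and the `build_context` helper are all hypothetical, chosen only to contrast dropping earlier reasoning with carrying it forward into the next turn.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Turn:
    """One conversation turn; `reasoning` is a hypothetical field for model thoughts."""
    role: str
    content: str
    reasoning: Optional[str] = None


def build_context(history: List[Turn], preserve_thinking: bool) -> List[Dict[str, str]]:
    """Build the message list for the next request.

    With preserve_thinking=False, earlier reasoning is dropped and only the
    visible replies are carried forward. With preserve_thinking=True, each
    assistant turn keeps its reasoning so later steps can build on it.
    """
    messages = []
    for turn in history:
        message = {"role": turn.role, "content": turn.content}
        if preserve_thinking and turn.reasoning is not None:
            message["reasoning"] = turn.reasoning
        messages.append(message)
    return messages


if __name__ == "__main__":
    history = [
        Turn("user", "Add pagination to the orders endpoint."),
        Turn(
            "assistant",
            "Added limit/offset parameters.",
            reasoning="The table is large, so offset paging may be slow; revisit with cursors.",
        ),
        Turn("user", "Now make it fast for deep pages."),
    ]
    for flag in (False, True):
        kept = sum("reasoning" in m for m in build_context(history, flag))
        print(f"preserve_thinking={flag}: {kept} turn(s) carry reasoning")
```

In the preserved case, the note about cursors from the first step is still available when the follow-up request arrives, which is the kind of continuity the feature list describes.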

—

Deployment and Accessibility

– The GLM 4.7 model is openly accessible for free via Z.ai chat (chat.z.ai).

– The weights can be downloaded from Hugging Face to run locally, or the model can be accessed through APIs (e.g., OpenRouter).

– Developers and enterprises can utilize the open weights for fine-tuning and deployment on private infrastructure, reducing costs dramatically compared to closed-source offerings.

– Ongoing preparations include running the model on multiple M3 Ultra chips using advanced interconnects like RDMA over Thunderbolt to scale performance further.

– Complementary open-source tools such as Open-Orchestra extend the model’s vision capabilities, solving frontend development challenges by enabling it to “see” images.
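For API access, the following is a minimal sketch of a chat request through OpenRouter's OpenAI-compatible chat completions endpoint, using only the Python standard library. The model slug `z-ai/glm-4.7` is an assumption and should be checked against OpenRouter's model list. The `OPENROUTER_API_KEY` environment variable name and the helper function names are likewise illustrative.

```python
import json
import os
import urllib.request

OPENROUTER_URL = "https://openrouter.ai/api/v1/chat/completions"
# Assumption: the slug below is a guess; confirm the exact ID on OpenRouter.
MODEL_SLUG = "z-ai/glm-4.7"


def build_request(prompt: str, api_key: str, model: str = MODEL_SLUG) -> urllib.request.Request:
    """Build (but do not send) a chat completion request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        OPENROUTER_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def ask(prompt: str, api_key: str) -> str:
    """Send the request and return the text of the first choice."""
    with urllib.request.urlopen(build_request(prompt, api_key), timeout=60) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]


if __name__ == "__main__":
    key = os.environ.get("OPENROUTER_API_KEY")
    if key:
        print(ask("Summarize what an MIT license permits in two sentences.", key))
    else:
        print("Set OPENROUTER_API_KEY to send a real request.")
```

Keeping request construction separate from sending makes the payload easy to inspect or adapt, for example to point at a self-hosted server that exposes the same OpenAI-compatible interface.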

—

Impact and Industry Significance

GLM 4.7’s release shakes up the AI industry by making cutting-edge coding and reasoning models broadly available and affordable. It rapidly closes the gap with giants such as OpenAI and Google in multiple domains, especially in agentic AI that executes tasks rather than just chatting.

This open-source breakthrough supports a shift from exclusive lab-driven AI toward community-driven, real-world evolution based on user feedback and practical needs. The lower cost combined with strong performance means that serious, agentic workflows previously reserved for closed ecosystems are now viable in open-source.

MiniMax M2.1 also shows promise, matching Sonnet 4.5-level performance on many coding and reasoning benchmarks and fueling innovation without licensing burdens.

Looking ahead, open-source models could reach full feature parity with closed-source models, or surpass them, by mid to late 2026.

—

Summary

– GLM 4.7 is a revolutionary open-weight AI model rivaling closed-source giants in coding, reasoning, and tool use.

– It excels in complex, multimodal, and multi-task workflows powered by a unique preserved thinking architecture.

– The model produces interactive, production-ready outputs, including visually refined UI and presentations.

– Released under an MIT License, it democratizes advanced AI capabilities at dramatically lower cost.

– Developers, creators, and researchers are encouraged to explore GLM 4.7 for building real applications, interactive workflows, and rich visual content.

– Alongside MiniMax M2.1, GLM 4.7 signals a new era of accessible, high-performance open-source AI that can compete on the frontier of innovation.

For more details and to try GLM 4.7:

https://chat.z.ai

https://huggingface.co/zai-org/GLM-4.7

https://z.ai/blog/glm-4.7